How to Build an ABM Program for Developer Tools (That Actually Works)

Account-based marketing still works in technical verticals, but it needs some adjustments.

If you're selling developer tooling, traditional ABM methods won't work. Engineers don't spend their time in the places that typical B2B buyers do. Rather than posting on LinkedIn, they're asking for feedback on Slack, Reddit, and Discord, and they'd rather try something than hear a pitch about it. In this article, we break down how to set up an ABM program to reach elusive technical buyers.

Key Takeaways

- Traditional ABM doesn't work for developer tools. Engineers avoid LinkedIn and sales calls, so you need to find them on Slack, Reddit, and GitHub, or at technical events.

- Three signal types work together. Account intelligence tells you who owns decisions. Technographics tell you which accounts fit your product. Intent signals tell you who's ready to buy right now. Each is necessary; none is sufficient alone.

- Volume is the challenge. Manually monitoring developer communities, tracking job postings, and mapping org structures is impossible at scale. Onfire lets you track 50M+ engineers across 100K+ sources.

- Relevance beats automation. Your edge is reaching out about problems prospects have already mentioned, not sending better-written cold emails.

- Measure what matters. Track speed (time-to-touch), quality (win rates by signal type), and coverage (% of accounts with recent signals).

Why ABM Is Different for Developer Tools

Traditional B2B buyers may be difficult to land, but at least you know where to look for them. With developer tooling, that changes. The engineers using your product typically don't like talking to salespeople, and they tend to be allergic to LinkedIn. That puts sales teams in a bind.

On the one hand, engineers would rather try something than hear a sales pitch about it. That's why most successful engineering-centric companies offer a free tier or trial, and product-led growth (PLG) has become table stakes in developer tooling. But PLG alone leaves too much up to chance. Developers may sign up for a free trial, but will they be motivated to champion your product before a buying committee? Who knows.

On the other hand, if you leave the engineers out of the equation entirely, buyers have little reason to trust your messaging. They'll need some evidence that your tooling will work before committing to an enterprise plan. And while case studies are great, the most trustworthy evidence can only come from their own devs.

The path forward is reaching technical buyers with messages so relevant that they'll want to hear more. Intent signals make that possible.

Finding Technical Buyers Where They Actually Are

Firmographics and technographics give you a foundation. Company size, industry, and tech stack all help you build a target account list. However, they don't tell you who's ready to buy right now.

For that, you need to go where developers actually spend their time.

Developer communities are goldmines. Engineers ask questions on Slack, debate tooling choices on Reddit, and collaborate on GitHub. When someone posts in a DevOps Slack channel asking "has anyone used [competitor] for CI/CD pipelines?", that's a signal worth acting on. The same goes for Reddit threads comparing security tools or Discord discussions about migration pain points.

Of course, you could theoretically join every relevant Slack workspace and monitor every subreddit, but no team has that kind of bandwidth. That's why automation is key.

Technical events reveal timing. Conferences like AWS re:Invent, Black Hat, or Gartner events attract buyers who are actively evaluating solutions. But the signal isn't just attendance; it's also what people say before and after.

Someone posting "heading to BlackHat next week, looking to connect with security and DevOps folks" is telling you exactly where their head is at. Even smaller events matter. Luma dinners, meetup RSVPs, and local tech gatherings all indicate active engagement with a problem space.

OSS contributions and job postings reveal investment. A GitHub org that's suddenly active in a monitoring-related repo is likely building out its observability stack. Similarly, a company hiring Kubernetes engineers is probably scaling its container infrastructure.

These signals are public, but connecting them to specific accounts and contacts requires de-anonymizing usernames and mapping them to real people, which is nearly impossible to do manually.

The real advantage comes from combining these signals. For example, if you’ve found a senior engineer at a target account who just posted about CI/CD frustrations, attended a DevOps meetup, and works at a company hiring platform engineers, you’ve got yourself a warm lead.
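To make "combining signals" concrete, here is a minimal Python sketch of a recency-weighted lead score. Everything in it is an assumption for illustration: the signal type names, the weights in `SIGNAL_WEIGHTS`, the 14-day half-life, and the `WARM_THRESHOLD` cutoff are hypothetical values, not a description of how Onfire scores leads. It also requires at least two distinct signal types before calling a lead warm, reflecting the point that no single signal is enough on its own.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical weights per signal type; tune them against your own win rates.
SIGNAL_WEIGHTS = {
    "community_post": 3.0,    # e.g. a CI/CD complaint in a DevOps Slack
    "event_attendance": 2.0,  # e.g. an RSVP to a DevOps meetup
    "job_posting": 1.5,       # e.g. the account is hiring platform engineers
}
HALF_LIFE_DAYS = 14.0  # assumption: a signal loses half its value every 14 days
WARM_THRESHOLD = 5.0   # assumption: minimum combined score for a "warm" lead


@dataclass
class Signal:
    kind: str
    seen_at: datetime


def score(signals: list[Signal], now: datetime) -> float:
    """Sum the weight of each signal, discounted exponentially by its age."""
    total = 0.0
    for s in signals:
        age_days = (now - s.seen_at).total_seconds() / 86400
        total += SIGNAL_WEIGHTS.get(s.kind, 0.0) * 0.5 ** (age_days / HALF_LIFE_DAYS)
    return total


def is_warm(signals: list[Signal], now: datetime) -> bool:
    """Warm = enough recent evidence coming from at least two signal types."""
    distinct_kinds = {s.kind for s in signals}
    return len(distinct_kinds) >= 2 and score(signals, now) >= WARM_THRESHOLD


if __name__ == "__main__":
    now = datetime(2024, 5, 1)
    engineer = [
        Signal("community_post", now),                        # posted today
        Signal("event_attendance", now - timedelta(days=2)),  # meetup two days ago
        Signal("job_posting", now - timedelta(days=7)),       # hiring post last week
    ]
    print(round(score(engineer, now), 2), is_warm(engineer, now))
```

The decay term is a design choice: it lets an old Reddit post still count a little while ensuring that a cluster of fresh signals outranks a pile of stale ones.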

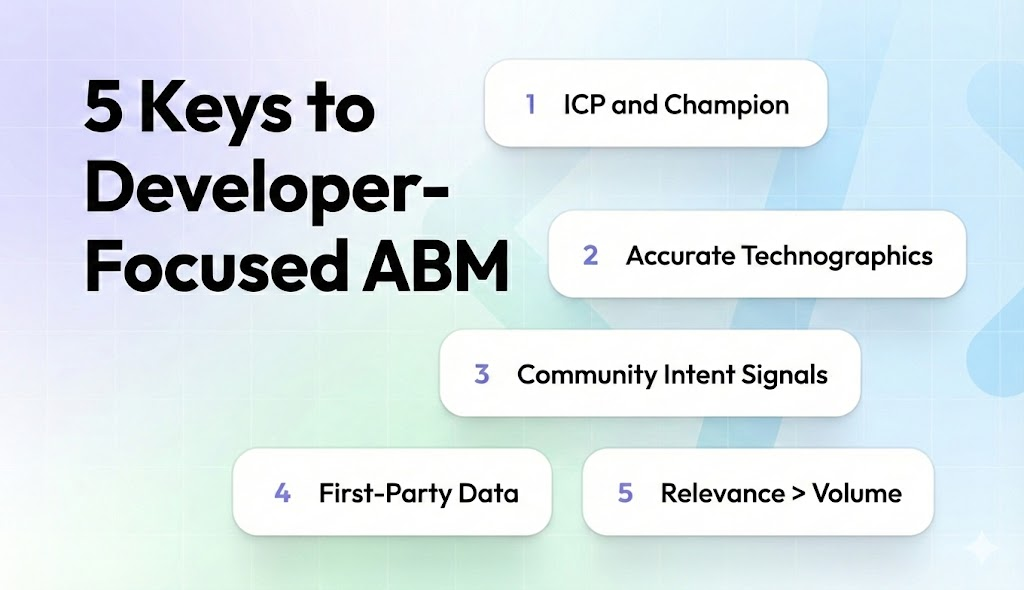

5 Key Features of an ABM Program for Developer Tools

The signals above are the raw ingredients. Turning them into an ABM program requires a system that captures, connects, and acts on them consistently. Here's what that looks like in practice.

1. Identify Your Champion Through Behavior, Not Just Title

Job titles are notoriously unreliable for finding technical champions. "Staff Engineer" and "Principal Engineer" can mean vastly different things at different companies. The person who owns observability decisions might report to the VP of Engineering, or they might be three levels down with outsized influence. Org charts show reporting lines, not decision-making power.

To find who actually drives technical choices, you need to layer data from multiple sources (job postings, community signals, first-party data, and smaller signals) to build an accurate picture of how technology decisions are made at the account. The goal is to identify the specific person who owns the infrastructure initiative you're selling into, not just anyone with "DevOps Engineer" in their title.

How Onfire can help: Onfire's Account Intelligence Graph maps 50M+ engineers, and the system is customized per client to surface the individuals who actually own technical decisions, not just those with the right title.

2. Accurate Technographics Are Crucial

Technographics matter more when selling to developers than almost any other audience. A company's tech stack determines whether they're even a fit - and shapes how you position against alternatives. If you're selling a Kubernetes security tool, you need companies running Kubernetes in production. If you're selling observability, knowing whether a prospect uses Datadog, Splunk, or a homegrown stack changes everything about your pitch.

The problem is that most technographic data is unreliable. Traditional providers rely on front-end web scraping and keywords in job posts, so their data often lags reality: by the time your BDR reaches out about a prospect's Jenkins environment, the team may have already migrated to GitHub Actions. And even when the data is current, it's often too coarse. Knowing a company uses AWS tells you almost nothing; you need to know which services they run, what they're actively investing in, and where the gaps are.

Real-time signals (what a company is hiring for, which repos its engineers contribute to, which tools they're discussing) paint a far more accurate picture than any static database.

How Onfire can help: Onfire offers the most up-to-date and accurate technographic data, specifically when it comes to software infrastructure - backend tools, security, cloud services. This is derived from data collection infrastructure and vertical AI that were both built specifically to “understand” the true picture of an account’s tech stack.

3. Track Intent Signals Across Communities and Events

Engineers don't announce buying intent on LinkedIn. They ask questions on Slack, debate tooling choices on Reddit, and post about conference plans on Twitter. A Reddit thread comparing WAF providers, a Slack message asking "has anyone migrated off Datadog?", or a post saying "heading to KubeCon, looking to connect with observability folks"—these are the signals that indicate someone is actively evaluating.

The challenge is scale: developers scatter their activity across hundreds of communities, and most usernames are anonymized. Manually monitoring even a handful of relevant Slack workspaces and subreddits would consume your entire team's bandwidth—and you'd still miss the connection between that helpful Reddit commenter and the prospect in your CRM.

How Onfire can help: Onfire monitors 100K+ sources including Slack, Discord, Reddit, GitHub, and event platforms, using AI-powered identity resolution to connect anonymous activity to real contacts at real companies.

4. Use Your First-Party Data

Third-party signals are powerful, but they lack context. Did that engineer who just posted about CI/CD frustrations also sign up for your free trial last month? Is their company already in your CRM from a previous conversation? A Reddit post alone is interesting. A Reddit post from someone whose company trialed your product, is now hiring for roles that use tools in your category, and has a competitor renewal coming up: that's a lead you should call today.

Most teams already have this data (after all, developers love a hands-on evaluation), but it's scattered across the CRM, marketing automation, and product analytics. The work of connecting it to external signals either doesn't happen or happens too slowly to matter.
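As a rough illustration of what "connecting" these sources means, here is a minimal Python sketch that joins them on company domain. The record shapes, field names, and the `fuse` function are hypothetical; real CRM and product-analytics exports will need their own identity matching before a simple domain join like this works. Accounts are ranked by how many independent sources (product usage, third-party activity, CRM history) back them up, and each keeps a list of reasons so a rep can see why it surfaced.

```python
from collections import defaultdict


def fuse(crm_accounts, trial_signups, external_signals):
    """Join first-party and third-party records on company domain.

    Hypothetical input shapes:
      crm_accounts:     {domain: {"stage": str}}
      trial_signups:    [{"domain": str, "email": str}]
      external_signals: [{"domain": str, "kind": str, "detail": str}]
    Returns [(domain, [(source, reason), ...]), ...], best-supported first.
    """
    context = defaultdict(list)
    for s in trial_signups:
        context[s["domain"]].append(("product", f"trial signup by {s['email']}"))
    for sig in external_signals:
        context[sig["domain"]].append(("third_party", f"{sig['kind']}: {sig['detail']}"))
    for domain, acct in crm_accounts.items():
        # Only annotate accounts that show fresh activity; CRM history alone
        # is not treated as a reason to reach out.
        if domain in context:
            context[domain].append(("crm", f"CRM stage: {acct['stage']}"))

    def rank_key(item):
        _, reasons = item
        distinct_sources = {source for source, _ in reasons}
        return (len(distinct_sources), len(reasons))

    return sorted(context.items(), key=rank_key, reverse=True)


if __name__ == "__main__":
    ranked = fuse(
        crm_accounts={"acme.io": {"stage": "closed-lost"}, "globex.com": {"stage": "open"}},
        trial_signups=[{"domain": "acme.io", "email": "dev@acme.io"}],
        external_signals=[
            {"domain": "acme.io", "kind": "reddit_post", "detail": "CI/CD frustrations"},
            {"domain": "initech.dev", "kind": "job_posting", "detail": "hiring platform engineers"},
        ],
    )
    for domain, reasons in ranked:
        print(domain, [reason for _, reason in reasons])
```

Ranking by distinct sources first mirrors the argument above: an account with a trial signup, a community post, and CRM history outranks one with several posts from a single channel.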

How Onfire can help: Onfire creates a unified layer that fuses your CRM and product analytics with third-party signals, surfacing prioritized opportunities directly in Salesforce with the full context of why.

5. Reach Out with Relevance, Not Volume

Everyone can send semi-personalized emails to thousands of prospects now. Anyone can spin up an AI agent that scrapes data from a prospect's LinkedIn profile and drops it into an email. Developers can spot the pattern from a mile away, and their filters are tuned accordingly.

Your edge isn't going to come from dropping the prospect's high school in the subject line. But it might come from reaching people about problems they've already expressed. When you can reference a specific Slack question or Reddit post, you're not cold-calling; you're responding to a signal they put out. "I saw you asked about alternatives to Datadog in the CloudNative Slack last week" gets a reply; "I see you're connected with John Doe" will likely get you blocked.

How Onfire can help: Onfire surfaces the specific posts, events, and activity tied to each contact, giving your BDRs the context to write outreach that actually resonates - without 15 minutes of research per prospect.

The Metrics That Matter for ABM

Signal-based ABM requires different measurements than traditional campaigns. Here are some key metrics along with the questions they help you answer.

Speed metrics

- Signals touched within 24 hours: What percentage of signals get acted on within a day? Stale signals lose value fast.

- Time-to-first-touch: How long between a signal appearing and your team reaching out?

- Time-to-meeting: Are signals actually accelerating the pipeline?

Quality metrics

- Reply rate: Are signal-based outreach messages getting better responses than cold outreach?

- Meeting/SQL rate by signal type: Which signals actually convert? (Measure each separately: high-intent community posts vs. event attendance vs. job postings.)

- Win rate from signal-sourced opportunities vs. baseline: Do signal-sourced deals close at higher rates?

Coverage metrics

- % of target accounts with any signal in the last 30/60/90 days: Are you actually seeing signals from your ICP, or are there gaps in your visibility?

- Pipeline $ from signal-sourced opportunities: What's the revenue impact?
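The speed and coverage metrics above reduce to simple arithmetic once each signal is logged with a detection time, a first-touch time, and an account. The following Python sketch assumes that hypothetical record shape (`detected_at`, `touched_at`, `account`) and uses the median for time-to-first-touch so a few long-ignored signals don't skew the number; both choices are illustrative rather than a standard definition.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical signal log: one dict per signal.
#   detected_at: when the signal appeared
#   touched_at:  when the team first reached out (None if never)
#   account:     the target account the signal was mapped to


def pct_touched_within(signals, window=timedelta(hours=24)):
    """Percentage of signals whose first touch landed within `window`."""
    if not signals:
        return 0.0
    fast = sum(
        1 for s in signals
        if s["touched_at"] is not None and s["touched_at"] - s["detected_at"] <= window
    )
    return 100.0 * fast / len(signals)


def median_hours_to_first_touch(signals):
    """Median hours from detection to first touch, ignoring untouched signals."""
    hours = [
        (s["touched_at"] - s["detected_at"]).total_seconds() / 3600
        for s in signals
        if s["touched_at"] is not None
    ]
    return median(hours) if hours else None


def coverage_pct(target_accounts, signals, now, days):
    """Percentage of target accounts with at least one signal in the last `days`."""
    targets = set(target_accounts)
    if not targets:
        return 0.0
    cutoff = now - timedelta(days=days)
    recent = {s["account"] for s in signals if s["detected_at"] >= cutoff}
    return 100.0 * len(recent & targets) / len(targets)


if __name__ == "__main__":
    now = datetime(2024, 6, 1)
    log = [
        {"account": "acme", "detected_at": now - timedelta(days=2),
         "touched_at": now - timedelta(days=2) + timedelta(hours=3)},
        {"account": "acme", "detected_at": now - timedelta(days=40),
         "touched_at": now - timedelta(days=38)},
        {"account": "globex", "detected_at": now - timedelta(days=10), "touched_at": None},
    ]
    targets = ["acme", "globex", "initech", "umbrella"]
    print(pct_touched_within(log), median_hours_to_first_touch(log))
    for days in (30, 60, 90):
        print(days, coverage_pct(targets, log, now, days))
```

Untouched signals count against the 24-hour rate but are left out of the time-to-first-touch median; tracking them separately keeps "slow" and "never" from blurring together.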

The goal is to build a feedback loop. If certain signal types consistently lead to higher win rates, weight them more heavily. If others generate meetings that don't convert, deprioritize them.
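One simple way to close that loop is to compare each signal type's win rate with a baseline of deals that didn't come from a signal, then scale that type's weight by the ratio (its "lift"). The Python sketch below assumes hypothetical closed-opportunity records with a `signal` field (`None` for baseline deals) and a `won` flag, and leaves a weight untouched until its signal type has enough closed deals to judge. It is one heuristic among many and ignores confounders such as deal size or segment.

```python
from collections import Counter


def reweight(weights, opportunities, min_deals=20):
    """Scale each signal weight by its win-rate lift over non-signal deals.

    weights:       {signal_type: float}, e.g. hypothetical scoring weights
    opportunities: closed deals as {"signal": str | None, "won": bool};
                   signal=None marks a baseline (non-signal-sourced) deal
    min_deals:     leave a weight unchanged until it has this many closed deals
    """
    totals, wins = Counter(), Counter()
    for opp in opportunities:
        key = opp["signal"] or "baseline"
        totals[key] += 1
        wins[key] += int(opp["won"])

    if wins["baseline"] == 0:
        return dict(weights)  # no baseline win rate to compare against
    baseline_rate = wins["baseline"] / totals["baseline"]

    updated = dict(weights)
    for kind, weight in weights.items():
        if totals[kind] >= min_deals:
            lift = (wins[kind] / totals[kind]) / baseline_rate
            updated[kind] = weight * lift
    return updated


if __name__ == "__main__":
    deals = (
        [{"signal": None, "won": i < 4} for i in range(20)]                # 20% baseline
        + [{"signal": "community_post", "won": i < 8} for i in range(20)]  # 40% win rate
        + [{"signal": "job_posting", "won": False} for _ in range(5)]      # too few deals
    )
    print(reweight({"community_post": 3.0, "job_posting": 1.5}, deals))
```

Here community posts win at twice the baseline rate, so their weight doubles, while job postings keep their weight until they accumulate enough closed deals. In practice you would likely cap or normalize the lift so a short lucky streak can't swamp the other weights.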

See What a Custom-Tuned ABM Program Looks Like

Looking to launch an ABM program for developer tools but don't know where to start? Talk to an Onfire expert to learn how you can refine your ICP, find your potential champions, and launch outbound campaigns built on data that makes your outreach relevant. Start here.

FAQ

Why do ABM programs often fail for developer-led products?

Programs fail when they apply B2B marketing conventions to an audience that actively avoids them. Most ABM playbooks assume buyers are reachable through LinkedIn ads and sales calls. However, engineers prefer trying tools themselves and distrust traditional sales tactics. To succeed, you have to meet developers where they actually spend time.

How do teams avoid sounding promotional when targeting developers?

Reference specific problems they've mentioned publicly, such as a Reddit post, a conference talk, or a community question. Don't pitch features; acknowledge the challenge they're facing and offer to help. Developers have finely tuned BS detectors, so relevance and authenticity matter more than polish.

What role does product usage play in ABM for developer tools?

Product usage signals (free trials, API calls, feature adoption) are among the strongest intent indicators. When combined with external signals like community activity or event attendance, they help identify who's genuinely evaluating versus casually browsing. That’s why the best ABM programs layer first-party usage data with third-party intent signals.

How should sales engage after developer interest is detected?

Lead with the signal itself: "I noticed you asked about X in [community]" or "I saw you're attending [conference]." Offer something useful like a relevant case study, a technical comparison, or a direct line to engineering. Avoid jumping straight to demo requests; earn the conversation first.

When does ABM make sense versus product-led growth alone?

PLG works well for self-serve deals but leaves enterprise revenue on the table. ABM makes sense when deal sizes justify the effort, when technical champions need help navigating internal buying committees, or when you're targeting specific accounts that won't find you organically. Most mature dev-tool companies run both motions in parallel.
